After years of thievery and plagiarism at the hands of AI, creators are finally giving these bots a taste of their own medicine. Nightshade (version 1.0), an AI-fighting tool built at the University of Chicago, has been available for artists to download on Windows PCs and Apple Silicon Macs since Jan. 18.

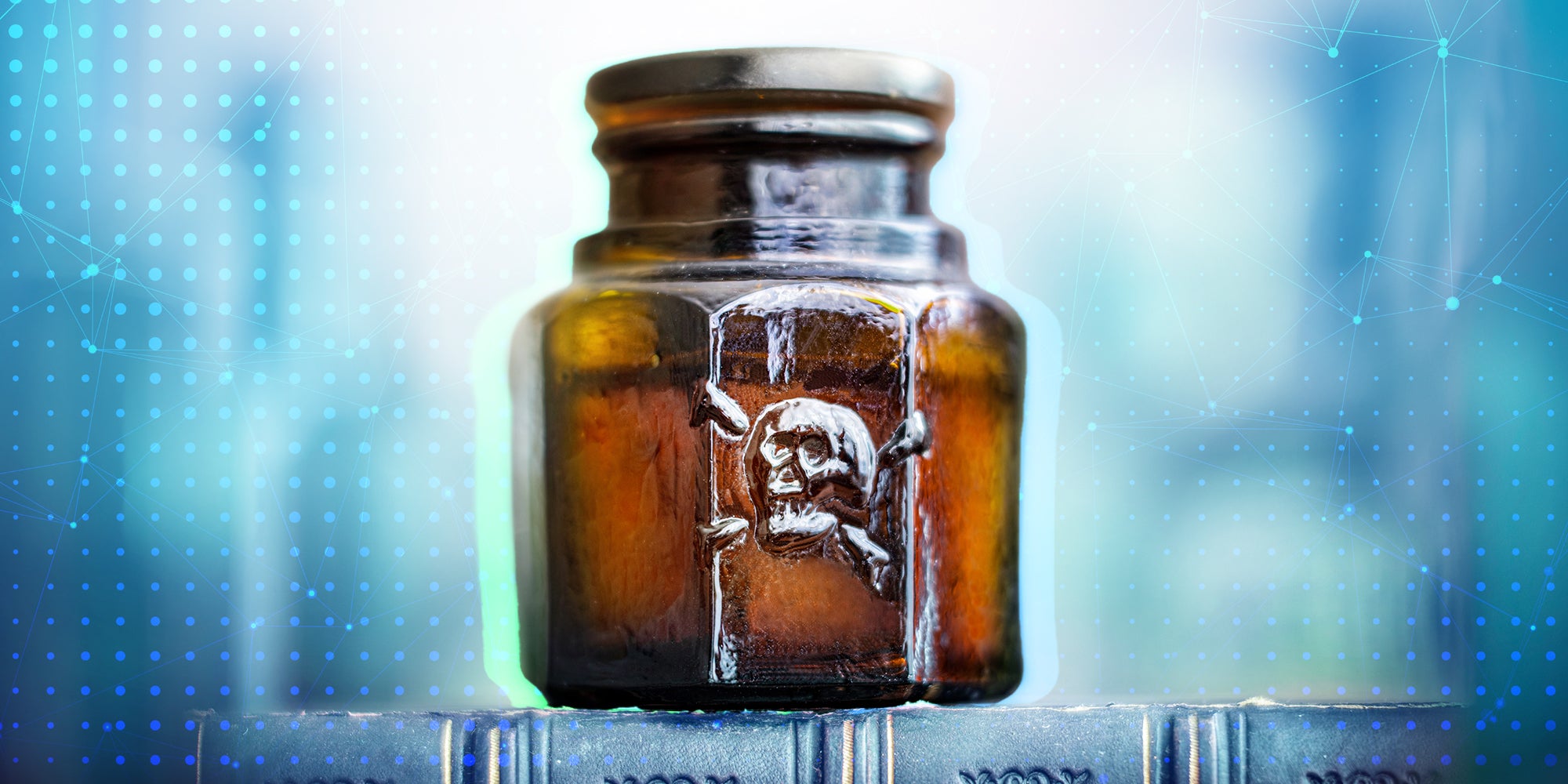

Nightshade has been in development for a while, but this release opens the tool up to the public. As reported by Passionfruit when the project was first announced in October 2023, Nightshade works by effectively ‘poisoning’ digital art at a pixel level. Once an image has been altered in this way, an AI model that ingests it can no longer properly identify the drawing or duplicate it.

So, if you use Nightshade on a picture of a cat, for instance, the subtle, invisible changes made to the image mean AI software can no longer correctly identify it. Instead of seeing a cat, the software might identify the picture as a dog.
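To make the cat-to-dog idea concrete, here is a toy sketch in Python. It is not Nightshade's algorithm, which this article does not describe in detail. It assumes a made-up linear "cat vs. dog" scorer over eight grayscale pixels, and every name and number in it (`WEIGHTS`, `score`, `perturb`, `epsilon`) is hypothetical. It only illustrates the general principle that small, bounded pixel changes can flip what a model sees.

```python
# Toy illustration only: NOT Nightshade's actual method.
# A hypothetical linear "cat vs. dog" scorer over 8 grayscale pixels in [0, 1].

WEIGHTS = [0.9, -0.4, 0.7, -0.8, 0.5, -0.6, 0.3, -0.2]  # made-up values


def score(pixels):
    """Weighted sum of pixels; a positive score means 'cat'."""
    return sum(w * p for w, p in zip(WEIGHTS, pixels))


def label(pixels):
    """Turn the score into a label."""
    return "cat" if score(pixels) > 0 else "dog"


def perturb(pixels, epsilon):
    """Shift each pixel by at most epsilon in the direction that lowers the score."""
    shifted = []
    for w, p in zip(WEIGHTS, pixels):
        step = -epsilon if w > 0 else epsilon
        # Clamp so the result is still a valid pixel value.
        shifted.append(min(1.0, max(0.0, p + step)))
    return shifted


original = [0.50, 0.55, 0.50, 0.52, 0.50, 0.50, 0.50, 0.50]
poisoned = perturb(original, epsilon=0.04)
max_change = max(abs(a - b) for a, b in zip(original, poisoned))

print(label(original), label(poisoned), round(max_change, 3))
```

With these made-up numbers, the label flips from cat to dog even though no pixel moves by more than 0.04 on a 0-to-1 scale. Real image models are far more complex, but the intuition carries over: a change too small for a person to notice can still matter a great deal to the software.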

But why would artists want to do this? Well, for them, it restores some agency over their own work.

The University of Chicago researchers who built the software suggest it can be used as a deterrent against unwanted data scraping.

“Nightshade can provide a powerful tool for content owners to protect their intellectual property against model trainers that disregard or ignore copyright notices, do-not-scrape/crawl directives, and opt-out lists,” they wrote in a statement.

The same University of Chicago team also developed Glaze, a tool first released in June 2023 that tricks AI programs into reading art in a different style from the one actually present, such as reading realism as abstract art. The team states on its website that Glaze is more of a defensive tool to prevent a creator’s art style from being mimicked, whereas Nightshade is offensive and intended to disrupt the training of AI models. The team recommends artists use both Glaze and Nightshade together to protect their work.

And if social media is anything to go by, this vigilantism against AI thieves is already in progress. #Nightshade is rising in popularity on X, as artists from across the globe encourage one another to take some power back from the machines that have already taken so much from them.

It doesn’t even have to stop at art. Some creators suggest using it on photographs you’d share on social media to make them steal-proof. In theory, this could make it a lot harder for AI to use your likeness.

Some artists are also claiming that it could be illegal for AI companies to try to circumvent software like Nightshade and Glaze. But the truth is, as with most copyright questions around AI art, there is no legal precedent for this yet.

As everyone takes the law into their own hands, the AI art world is really starting to feel like the Wild West.