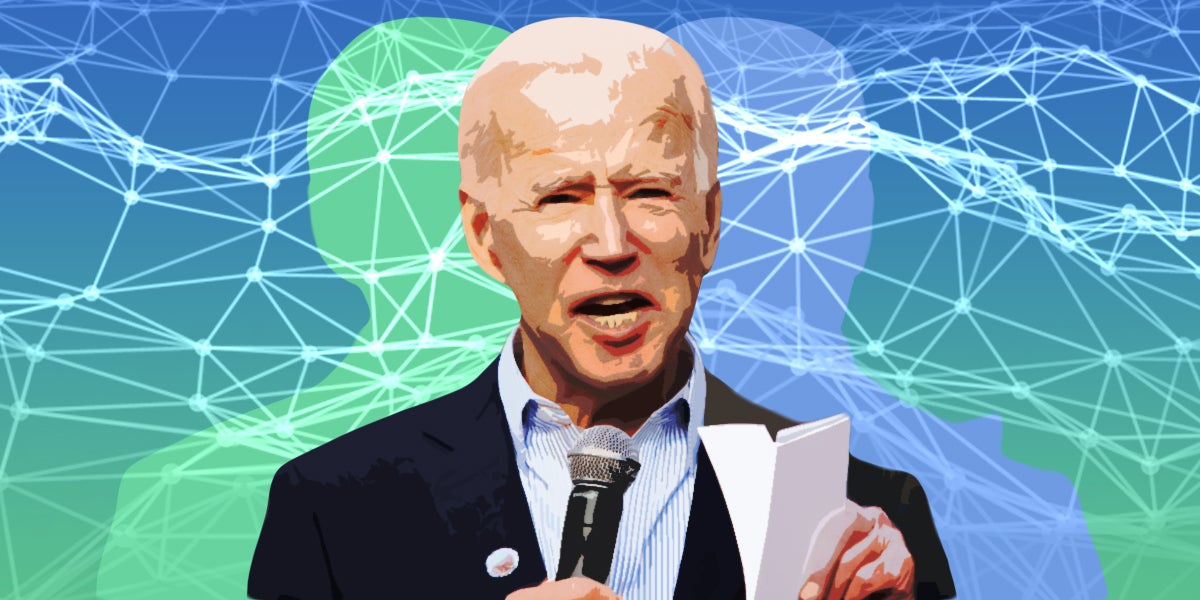

On Monday, President Joe Biden signed an executive order that lays the groundwork for regulation of artificial intelligence (AI) across nearly every industry and government. Additionally, Vice President Kamala Harris announced several new U.S. initiatives focused on safe and responsible use of AI at the Global Summit on AI Safety in the U.K. on Wednesday.

To some observers, the broad executive order falls short of offering any fundamental policy changes. While the order builds on voluntary commitments companies made earlier in the year and on the non-binding Blueprint for an AI Bill of Rights, this latest exercise of executive power largely relies on action by federal agencies, such as the Federal Trade Commission, the National Institute of Standards and Technology, and the U.S. Patent and Trademark Office, to have any force or effect. It punts to Congress to draft and pass laws, secure funding, and implement changes. And in practice, the order could be withdrawn or modified if there’s a change in administration in 2025 or 2029.

Still, one thing remains clear: How this new AI regulation is implemented will have a profound impact on the creator economy, from creators to platforms and everyone in between. For creators, regulation of AI, particularly generative AI, could prove as consequential as early internet regulations such as the DMCA or Section 230. And the executive order will have some immediate effects, with the executive branch’s Commerce Department spearheading a few key changes.

So if you’re a chronically online artist wanting to know more, here is a breakdown of three critical aspects of the executive order and their potential impact on digital creators.

Transparency and Trustworthiness

Under the executive order (EO, for short), developers of the most powerful AI systems must share safety test results and other critical information with the U.S. government. The idea is that government oversight of these systems will help ensure they are “safe, secure, and trustworthy,” much as the Food and Drug Administration has oversight and regulatory authority over the food and medicine Americans consume. The executive branch draws its power to implement this requirement from the Defense Production Act (the National Institute of Standards and Technology will be spearheading the charge).

Harris’s announcement includes the establishment of a U.S. AI Safety Institute which “will develop technical guidance that will be used by regulators considering rulemaking and enforcement on issues such as authenticating content created by humans, watermarking AI-generated content, identifying and mitigating against harmful algorithmic discrimination, ensuring transparency, and enabling the adoption of privacy-preserving AI, and would serve as a driver of the future workforce for safe and trusted AI.”

Such oversight could have many effects, including protecting audiences and consumers from potentially harmful or manipulative algorithms and content. The regulations could also unlock a new layer of trust between platforms, creators, and audiences. However, new processes and policies for publishing content could slow down a historically open internet, whether that content is synthetic or human-created.

Synthetic Content Detection

The creator economy is in the midst of a massive shift following the democratization of tools to produce synthetic content, also referred to as AI-generated or generative material. Many regulators are concerned about the proliferation of misinformation, deepfakes, and other types of harmful content from generative AI.

“Misinformation is one that is top of mind for me,” shared Aidan Gomez, an AI researcher and early contributor to Google’s Transformer project, in an interview with the Guardian this week. “These [AI] models can create media that is extremely convincing, very compelling, virtually indistinguishable from human-created text or images or media.”

Biden’s EO calls for standards and best practices that support the identification, detection, and tagging of generative AI material. One existing approach is Adobe’s Content Credentials, metadata that identifies the applications used to create a piece of content and whether generative AI was involved. The Department of Commerce will be in charge of developing guidance on watermarking and labeling AI-generated content.

Content disclosures may hurt some creators, potentially drawing backlash or criticism if they are seen as relying too heavily on AI. However, amid ongoing debates over the lack of credit some creators receive when their art is scraped online to train AI, many artists will likely be happy to see others called out for using AI services that don’t pay or credit artists. Tracking content at the level of detail the EO proposes could also affect the royalties and payments platforms make, for example through lower royalties for synthetic media or different treatment by recommendation algorithms.

On intellectual property issues such as copyright and patents, the EO is notably light on substance. It defers to the U.S. Copyright Office’s ongoing study of AI, which covers, among other topics, the use of copyrighted works for training. It also calls on the U.S. Patent and Trademark Office to issue guidance to patent examiners and applicants on inventorship and the use of AI.

Privacy Preservation

Privacy is not a new issue for the creator economy. But AI developments have put the focus back on how platforms and AI developers treat the privacy of individuals. The EO seeks to ensure that privacy-preserving techniques are at the forefront of any AI development, deployment, and use.

For creators, the use of their voice, image, and likeness (for example, to power an AI fan-focused chatbot or an AI-generated song) is becoming a critical focus for regulation. Companies now face significant scrutiny over how they use people’s personal data in connection with these AI systems.

Given the current state of Congress, federal privacy legislation is a long shot anytime soon. Instead, we’ll likely keep seeing state-by-state laws that are variations on those already in place in the European Union and California.

But the Biden administration is clearly aiming to balance the need for AI regulation against the risk of stifling innovation or sending business overseas. The new announcements are unlikely to halt the rapid growth of AI in the creator industry.

While increased regulation could affect creators’ ability to keep using many of these tools and technologies, it could also strengthen user trust in platforms, algorithms, content, and creators. And if consumers feel they can trust what they see on social media, advertisers will view the ecosystem as reputable and safe, and creators could profit.

Do you have a clue about our next big story? Email tips@passionfru.it to send us your newsworthy information.