If there were a word of the year, it would almost certainly be AI. Much like cryptocurrency and NFTs last year, artificial intelligence and the role it could play in the creator economy feel inescapable.

In fact, a new survey titled “AI in Influencer Marketing 2023” suggests that creators are embracing it: 52% of the creators surveyed said they incorporate AI into their work, and 71% of them see AI as an opportunity for growth rather than a threat to the industry.

But are fully virtual influencers a step too far? That is a question all of us must ask ourselves as new software makes it feasible to turn images into full-on videos.

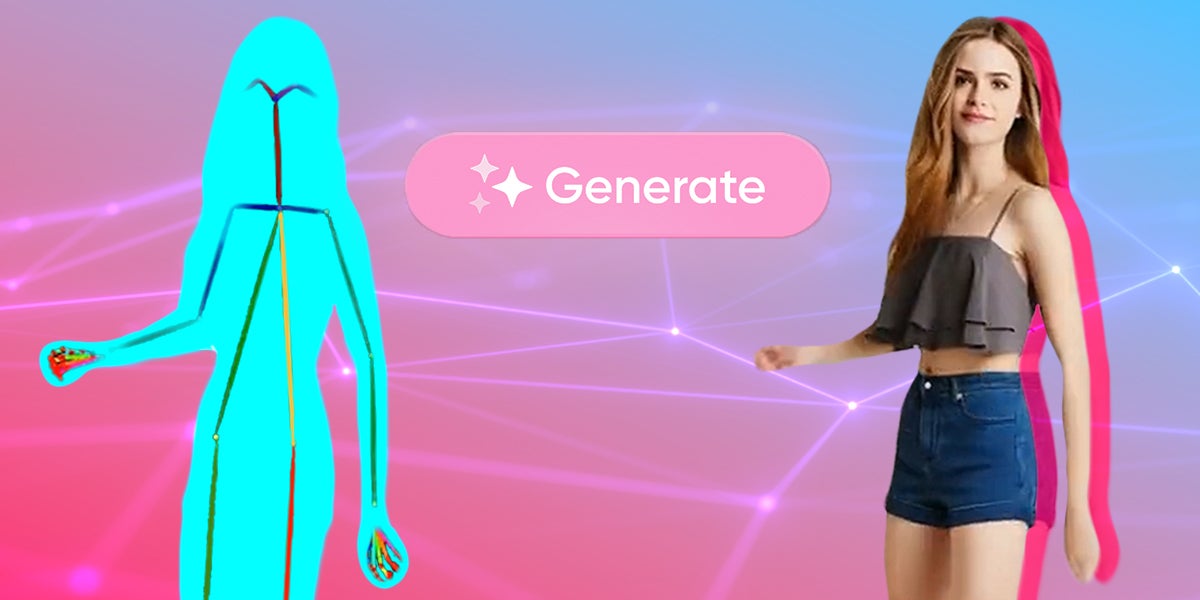

As the name implies, Animate Anyone is a tool that can turn a still image into a video. Researchers at Alibaba Group’s Institute for Intelligent Computing even used a TikTok dance to demonstrate how easily it could be used to create things like completely virtual influencers.

But now that brands have that kind of software at their disposal, does it make influencers obsolete?

It’s true that brands might be drawn to AI here because it would give them complete creative control over the content they put out. But the whole point of influencers is to add a human touch.

Audiences like the idea of someone real, tangible, and relatable: a friend they can turn to for advice on whether to buy the next big thing. And to be fair, we already have a completely virtual celebrity. Remember Hatsune Miku?

The technology behind characters like Miku is nothing new. Miku’s Vocaloid voicebank might have been a novelty when it was released in 2007, but more than fifteen years later, real musicians still exist; Vocaloids never replaced human artists. The novelty might be fun, but we doubt robots will be taking over the world just yet.

That being said, the ability to turn pictures into videos becomes problematic when real people are involved. For example, a business could use someone’s likeness without their permission to promote products they would never endorse in real life, or put them in compromising situations.

In the wrong hands, this kind of power could turn ugly fast. It also raises ethical concerns when a person’s likeness is used after their death or in deepfake pornography. If it becomes harder to tell real footage from deepfakes, that opens the door to some genuinely dangerous possibilities.

One thing is clear, at least: if AI continues to become part of influencer marketing, there need to be boundaries and regulations going forward.